With support for natural language commands, it allows intuitive and hands-free control of robotic movements.

Google DeepMind has taken a major leap in robotics with the launch of Gemini Robotics On-Device, a new variant of its Gemini AI model tailored for use in physical robots. Unlike AI models that depend on cloud access, this version is designed to run offline, making robots more self-reliant and responsive even without internet connectivity.

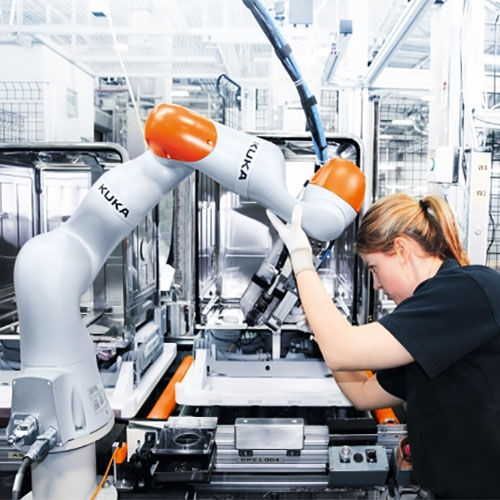

As part of Google’s robotics vision, the Gemini AI robotics model was tested on bi-arm robots, demonstrating the ability to perform intricate tasks such as folding clothes, unzipping bags, and handling real-world objects. What sets this version apart is its lightweight design, optimized to function using minimal computational power while still offering advanced task execution capabilities.

Crucially, the model includes natural language support, enabling intuitive, voice-controlled interaction with robots. Users can give instructions in plain English, allowing for hands-free operation in household or industrial settings.

This advancement is expected to reshape how AI is integrated into robotics, with applications spanning home automation, warehousing, eldercare, and more. By eliminating the dependency on cloud infrastructure, Gemini Robotics On-Device brings us closer to AI robots that can function autonomously, efficiently, and securely in real-world environments, with less exposure to network latency, bandwidth limitations, and the privacy concerns of sending data to the cloud.

With Gemini AI’s offline capabilities, Google reinforces its ambition to embed advanced intelligence directly into machines, pushing the boundaries of what's possible with self-reliant robots and setting a strong foundation for the future of AI-powered automation.
