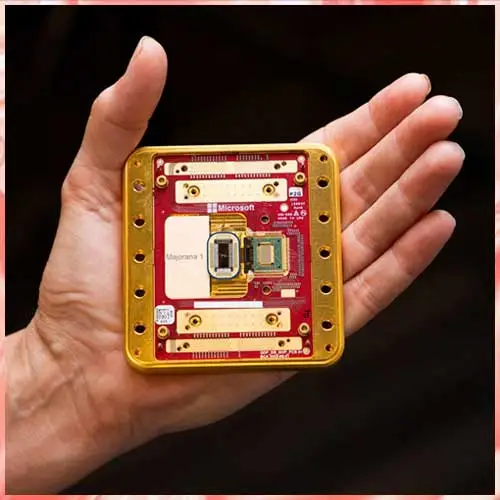

Microsoft has announced a significant leap in quantum computing with the development of the Majorana 1 chip, which utilizes a new state of matter known as a topological superconductor.

This revolutionary technology could pave the way for the world's most powerful quantum computers, a milestone that many believed was still decades away.

The Majorana 1 chip, built from indium arsenide and aluminum, uses a superconducting nanowire to detect and control Majorana zero modes. These are quasiparticles related to the Majorana fermions first theorized in the 1930s, not free subatomic particles. Because they store quantum information in a topologically protected way, they are expected to be highly resistant to errors, one of the biggest challenges in quantum computing.

Microsoft claims this approach could eventually allow a million qubits on a single chip, far beyond what today's most advanced machines offer.

Conventional quantum computers need many error-prone physical qubits, combined through error correction, to produce each reliable qubit. Microsoft's approach instead aims to use fewer, more stable qubits to achieve the same computational power.

This could transform fields such as drug discovery, cryptography, healthcare, and advanced manufacturing by solving complex problems beyond the capabilities of classical computers.

Microsoft’s breakthrough was published in the scientific journal Nature, with executives emphasizing that scalable, practical quantum computers are now "years, not decades" away.

Despite the potential benefits, experts warn that sufficiently powerful quantum computers could break widely used public-key encryption, putting sensitive data, including national secrets, at risk.

Harvard physicist Philip Kim hailed Microsoft’s work as an "exciting development," noting that the company’s approach—blending traditional semiconductors with exotic superconductors—offers a promising path toward scalable quantum chips.

Although the technology is still in its early stages, Microsoft believes its "high-risk, high-reward" strategy will soon redefine computing as we know it.
