While blocking competitive use, Anthropic confirmed OpenAI may retain limited API access for benchmarking and safety evaluations as per industry standards.

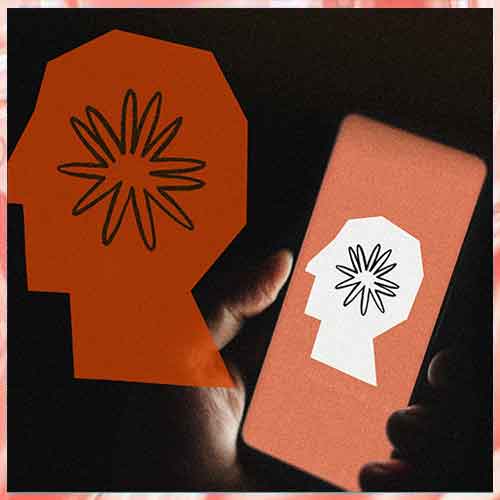

In a major development in the AI industry, Anthropic has revoked OpenAI’s access to its Claude AI models, accusing the ChatGPT-maker of violating its terms of service. According to media reports, OpenAI allegedly used Claude’s API for internal testing that went beyond the permitted purposes, including evaluating coding, creative writing, and safety responses as part of its preparations for the highly anticipated GPT‑5 launch.

This move comes at a critical time for OpenAI, which is gearing up to unveil GPT‑5, expected to be one of the most advanced large language models (LLMs) in the industry. By cutting off OpenAI’s access, Anthropic — the developer behind Claude, one of the strongest ChatGPT competitors — is sending a strong signal about protecting its intellectual property and maintaining competitive boundaries.

Despite the restriction, Anthropic confirmed that OpenAI may retain limited API access for benchmarking and safety evaluations, in line with standard industry practices. This means OpenAI can still use Claude for non-competitive purposes, such as comparing safety and performance benchmarks, but cannot integrate or test Claude in ways that could aid in developing competing products.

This development highlights the intensifying competition in the AI sector, where major players like OpenAI, Anthropic, Google DeepMind, and Meta are in a race to dominate artificial general intelligence (AGI) development. The incident also raises important questions about AI ethics, data usage policies, and the need for clearer guidelines on API access between competing organizations.

For OpenAI, this could pose challenges in training and testing GPT‑5, as access to rival models helps it calibrate its own safety and performance results against competitors. For Anthropic, the move reinforces its stance on safeguarding the Claude ecosystem as the company expands its presence in enterprise and consumer AI applications.

As the countdown to GPT‑5 begins, this OpenAI–Anthropic conflict underscores the high-stakes battle for AI dominance, where data, access, and partnerships will shape the future of next-generation AI models.
