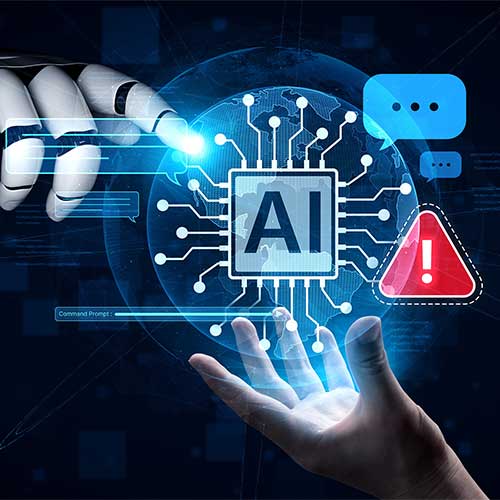

Cybercriminals have weaponized DAN-style ("Do Anything Now") jailbreak prompts to bypass AI safety restrictions and generate illicit content at scale. Jailbroken models produce phishing emails, social engineering scripts, fake invoices, and malware creation guides faster and with more polish, often making these attacks more convincing and harder to detect.

By exploiting DAN prompts, cybercriminals can coerce AI systems to produce content against policy, including counterfeit credentials, deceptive narratives, and hacking assistance that would otherwise be blocked by the AI's ethical safeguards. These jailbreaks are openly discussed and traded on underground cybercrime forums, often packaged with support and continuous improvements, fueling an underground economy for malicious AI use.

This weaponization accelerates cyber threats by letting attackers automate and personalize attacks that exploit human psychological responses, which raises success rates in phishing and fraud. Some threat actors also use AI to produce deepfakes and misinformation rapidly, complicating detection and response.

Overall, DAN-style jailbreaks heighten cybersecurity risk by removing AI content controls, amplifying the scale of attacks, and forcing defenders to adapt to increasingly automated and evasive threats.
