AI-assisted coding and app-generation platforms are accelerating software development at an unprecedented pace.

As organisations deploy more applications and update them more frequently, security and privacy teams are struggling to keep up.

While application footprints are expanding rapidly, security resources remain largely unchanged, creating serious exposure risks.

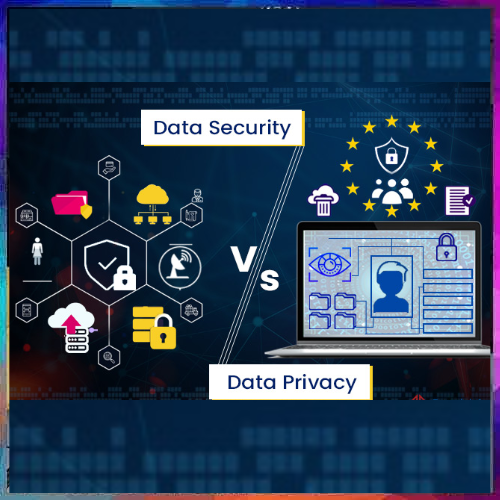

Traditional data security and privacy tools are too reactive for this new environment. Most operate only after data reaches production, when sensitive information may already be exposed.

They often miss hidden data flows to third-party services and AI integrations, and while they can detect issues, they rarely prevent them.

A more effective approach is to embed privacy and security controls directly into the development process.

One of the most common failures is the exposure of sensitive data in application logs.

Simple coding oversights, such as logging entire user objects or tainted variables that carry sensitive input, can result in costly breaches that take weeks to remediate.
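To make the logging risk concrete, here is a minimal Python sketch of masking sensitive fields before a user object reaches the log. The `SENSITIVE_KEYS` set, the `redact` helper, and the sample `user` record are hypothetical names chosen for illustration; a real application would need its own field list and would likely also handle nested objects.

```python
import logging

# Hypothetical set of field names treated as sensitive (an assumption for this sketch).
SENSITIVE_KEYS = {"email", "password", "ssn", "phone"}


def redact(record: dict) -> dict:
    """Return a shallow copy of `record` with sensitive values masked."""
    return {
        key: "[REDACTED]" if key.lower() in SENSITIVE_KEYS else value
        for key, value in record.items()
    }


# Illustrative user object; not taken from any real system.
user = {"id": 42, "email": "a@example.com", "password": "hunter2"}

logger = logging.getLogger("app")

# Risky pattern: logger.info("user loaded: %s", user) would write the
# email and password to the log verbatim.
# Safer pattern: log only the redacted copy.
logger.info("user loaded: %s", redact(user))
```

The helper returns a copy rather than mutating the original, so the application can keep using the full object while only the masked version is passed to the logger.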

As development teams scale, these risks multiply.

Outdated data maps present another major challenge.

Privacy regulations such as GDPR require accurate documentation of how personal data is collected, processed, and shared.

Manual, interview-based workflows quickly become unreliable in fast-changing environments.

AI experimentation adds further complexity.

Developers often introduce AI SDKs without oversight, sending data to external models without proper legal or privacy controls.

Without proactive enforcement at the code level, organisations are left reacting to risks after the damage is already done.
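As one illustration of what a code-level check might look like, the sketch below scans Python source for imports of AI SDK packages so that a review step can flag them before release. The `AI_SDK_PACKAGES` watchlist and the `find_ai_sdk_imports` function are assumptions made for this example, not a complete inventory or a specific vendor's method.

```python
import ast

# Hypothetical watchlist of top-level AI SDK package names (illustrative, not exhaustive).
AI_SDK_PACKAGES = {"openai", "anthropic", "cohere"}


def find_ai_sdk_imports(source: str) -> list[str]:
    """Return the sorted watchlisted packages imported by the given Python source."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 means an absolute import; relative imports are skipped.
            names = [node.module]
        else:
            continue
        for name in names:
            top_level = name.split(".")[0]
            if top_level in AI_SDK_PACKAGES:
                found.add(top_level)
    return sorted(found)


# Illustrative input; in practice this would be the contents of a changed file.
sample = "import os\nfrom openai import OpenAI\nimport anthropic.types\n"
flagged = find_ai_sdk_imports(sample)
```

A check like this could run in a pre-merge pipeline and route any flagged file to a privacy or legal review. It only detects the presence of an SDK import, not what data is actually sent to the external model.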
