FaceOff in Mental Health AI: Promise, Progress, and Precautions in Sensitive Human-Centric Use Cases

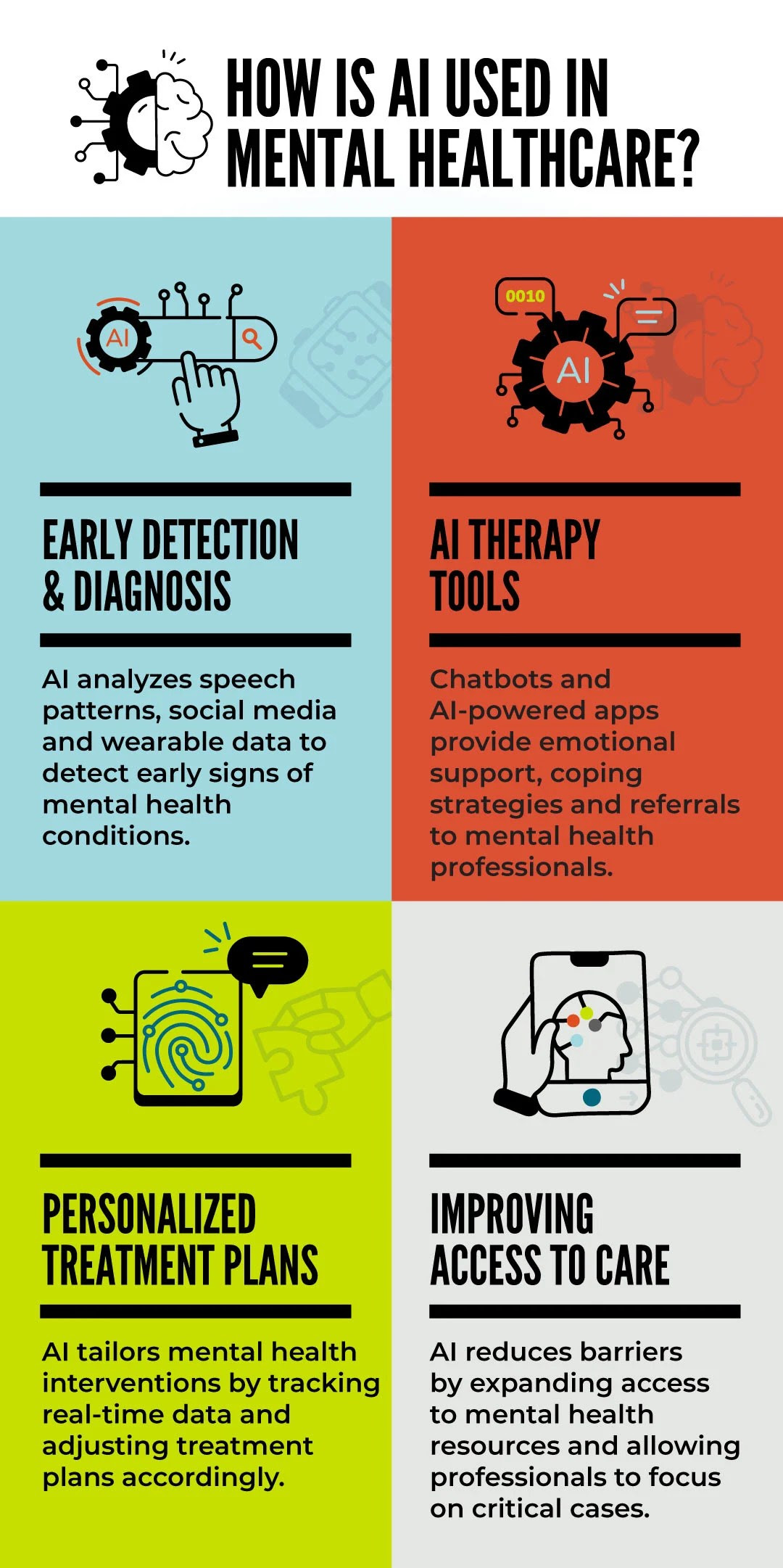

Mental Health AI is emerging as one of the most impactful yet sensitive applications of artificial intelligence. As global demand for mental health support continues to rise amid shortages of trained professionals, technology platforms must strike a careful balance between innovation, ethics, and human dignity. In this landscape, FaceOff offers a responsible, augmentation-focused approach to applying AI in mental health contexts.

Rather than attempting to diagnose or replace clinicians, FaceOff is designed to support early awareness, risk signaling, and secure human intervention, making it well suited for mental health use cases where trust, privacy, and accuracy are paramount.

The Promise: Where FaceOff Adds Value

FaceOff uses AI techniques to analyze observable behavioral signals within clearly defined, consent-based environments. These signals include facial expressions, micro-movements, engagement patterns, and changes in these signals over time. Applied thoughtfully, this capability can contribute to mental health support in several meaningful ways:

1. Early Risk Indication, Not Diagnosis

FaceOff can help identify deviations from an individual’s baseline behavior, such as prolonged disengagement, emotional flatness, heightened stress indicators, or fatigue patterns. These indicators can serve as early warning signals for burnout, anxiety, or emotional distress—prompting timely human review rather than automated conclusions.
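The Python sketch below makes "deviation from an individual's baseline" concrete. It assumes each behavioral signal has already been reduced to one numeric score per session. The signal names, the scores, and the two-standard-deviation threshold are all hypothetical illustrations, not FaceOff's actual features or parameters. A signal is flagged when the current value sits far from that person's own history. The output is only a list of signal names to hand to a human reviewer.

```python
import math
from statistics import mean, stdev


def baseline_deviation(history: list[float], current: float) -> float:
    """How many standard deviations `current` lies from this person's own baseline."""
    if len(history) < 2:
        raise ValueError("need at least two baseline observations")
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation of the baseline
    if sigma == 0:
        # A perfectly flat baseline: any change at all is an unbounded deviation.
        return 0.0 if current == mu else math.copysign(math.inf, current - mu)
    return (current - mu) / sigma


def flag_for_review(
    baselines: dict[str, list[float]],
    today: dict[str, float],
    threshold: float = 2.0,  # hypothetical cut-off, in standard deviations
) -> list[str]:
    """Names of signals that moved unusually far from baseline.

    The result is a prompt for human review, not a diagnosis.
    """
    return [
        name
        for name, history in baselines.items()
        if abs(baseline_deviation(history, today[name])) > threshold
    ]


# Hypothetical per-session scores for one consenting participant.
baselines = {
    "engagement": [0.70, 0.72, 0.68, 0.71, 0.69],
    "stress": [0.30, 0.32, 0.29, 0.31, 0.30],
}
today = {"engagement": 0.40, "stress": 0.31}
print(flag_for_review(baselines, today))  # ['engagement']
```

The comparison uses a per-person baseline rather than a population norm. This keeps the check from penalizing someone whose typical expression simply differs from the average.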

2. Support in Workplaces and Campuses

In organizational or educational settings, FaceOff can be integrated into wellness programs to help identify aggregate trends such as rising burnout risk or chronic stress across teams or cohorts—without exposing individual identities unnecessarily. This enables leadership and counselors to intervene proactively with supportive resources.
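Aggregate reporting of this kind is often paired with a minimum group size. With that rule in place, no reported figure can be traced back to one or two people. The sketch below assumes hypothetical (cohort, person, score) records and a hypothetical threshold of five distinct people. It reports only cohort-level averages and suppresses any cohort that is too small.

```python
from collections import defaultdict
from statistics import mean

MIN_COHORT_SIZE = 5  # hypothetical: smallest group whose average may be reported


def cohort_trends(records, min_size: int = MIN_COHORT_SIZE) -> dict[str, float]:
    """Average score per cohort from (cohort, person_id, score) records.

    Cohorts with fewer than `min_size` distinct people are suppressed, and no
    person-level value appears in the output.
    """
    scores = defaultdict(list)
    people = defaultdict(set)
    for cohort, person_id, score in records:
        scores[cohort].append(score)
        people[cohort].add(person_id)
    return {
        cohort: mean(values)
        for cohort, values in scores.items()
        if len(people[cohort]) >= min_size
    }


# Hypothetical weekly stress scores: team-a has five people, team-b only two.
records = [
    ("team-a", "a1", 0.4), ("team-a", "a2", 0.5), ("team-a", "a3", 0.6),
    ("team-a", "a4", 0.5), ("team-a", "a5", 0.5),
    ("team-b", "b1", 0.9), ("team-b", "b2", 0.8),
]
print(cohort_trends(records))  # team-a only; team-b is too small to report
```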

3. Augmentation for Mental Health Professionals

For clinicians, counselors, or psychologists, FaceOff can act as a decision-support layer, offering longitudinal insights across sessions—highlighting changes in engagement or emotional responsiveness that might otherwise be subtle or overlooked. Importantly, all interpretation and action remain with trained professionals.

The Progress: Responsible AI Design in Practice

Mental health is deeply contextual, cultural, and personal. FaceOff addresses this reality through design principles that prioritize caution over automation:

● Contextual analysis rather than one-size-fits-all emotional labeling

● Baseline comparison over time instead of absolute judgments

● Human-in-the-loop workflows where AI flags are reviewed, not enforced

● Explainable indicators that show what changed rather than what to conclude

By focusing on patterns and trends instead of diagnoses, FaceOff reduces the risk of misinterpretation, especially across diverse populations and languages and for neurodivergent individuals.
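One way to encode the "reviewed, not enforced" and "what changed" principles is a flag object with two properties. First, it carries plain descriptions of the indicators that changed. Second, it stays pending until a named person records a decision. This is a hypothetical data model written in Python, not FaceOff's actual schema, and the class, field, and status names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Indicator:
    signal: str
    baseline: float
    current: float

    def describe(self) -> str:
        """State what changed, without any clinical label."""
        if self.current == self.baseline:
            return f"{self.signal} unchanged at {self.current:.2f}"
        direction = "up" if self.current > self.baseline else "down"
        return f"{self.signal} {direction} from {self.baseline:.2f} to {self.current:.2f}"


@dataclass
class Flag:
    subject_id: str
    indicators: list[Indicator]
    status: str = "pending_review"  # new flags wait here until record_review() is called
    reviewer: Optional[str] = None
    decision: Optional[str] = None

    def explanation(self) -> list[str]:
        return [indicator.describe() for indicator in self.indicators]

    def record_review(self, reviewer: str, decision: str) -> None:
        """Close the flag with a named human reviewer's own decision."""
        if not reviewer:
            raise ValueError("a human reviewer must be identified")
        self.reviewer = reviewer
        self.decision = decision
        self.status = "reviewed"


flag = Flag("p-017", [Indicator("engagement", 0.70, 0.40)])
print(flag.explanation())  # ['engagement down from 0.70 to 0.40']
print(flag.status)         # pending_review
flag.record_review("counselor-3", "offer a check-in conversation")
print(flag.status)         # reviewed
```

The decision field holds free text written by the reviewer. The model proposes nothing beyond the description of what changed.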

The Precautions: Privacy, Trust, and Ethics by Design

Mental health data is among the most sensitive forms of personal information. FaceOff is designed to operate within strict ethical and governance boundaries, addressing the core risks associated with Mental Health AI.

1. Consent and Transparency

FaceOff deployments must be explicitly consent-driven, with clear communication on:

● What data is analyzed

● What insights are generated

● Who can access the results

● What actions may follow

There is no covert monitoring or secondary use of data.
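Purpose-bound consent can be checked before any analysis runs. The sketch below assumes a hypothetical consent record that lists the purposes a person agreed to, and the purpose names are illustrative. Analysis is refused in three cases:

- There is no consent record.
- Consent has been withdrawn.
- The requested purpose was never agreed to. This is how a request for secondary use would be blocked.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Consent:
    person_id: str
    purposes: frozenset  # purposes the person explicitly agreed to (hypothetical names)
    granted_at: datetime
    withdrawn_at: Optional[datetime] = None


def may_analyze(consent: Optional[Consent], purpose: str, now: datetime) -> bool:
    """Allow analysis only under active consent that names this exact purpose."""
    if consent is None or now < consent.granted_at:
        return False  # no consent in effect: no monitoring at all
    if consent.withdrawn_at is not None and consent.withdrawn_at <= now:
        return False  # consent was withdrawn
    return purpose in consent.purposes  # any other purpose counts as secondary use


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
consent = Consent("p-017", frozenset({"wellness_trends"}), datetime(2024, 1, 1, tzinfo=timezone.utc))
print(may_analyze(consent, "wellness_trends", now))     # True
print(may_analyze(consent, "performance_review", now))  # False
print(may_analyze(None, "wellness_trends", now))        # False
```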

2. Data Minimization and Protection

FaceOff emphasizes:

● Minimal data retention

● Secure encryption of all sensitive information

● Role-based access controls

● Clear audit trails for accountability

Together, these measures help ensure that emotional or behavioral data is not misused, repurposed, or exposed.
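Role-based access, audit trails, and minimal retention can be sketched together in a few lines of Python. The roles, permissions, and 30-day retention window are hypothetical placeholders. Encryption is deliberately left out, since it belongs to a vetted cryptography library rather than ad hoc code. Every access attempt is logged whether or not it is allowed, so denied requests remain visible to auditors.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical roles and permissions; a real deployment would define its own.
ROLE_PERMISSIONS = {
    "counselor": {"read_flags"},
    "program_admin": {"read_aggregates"},
}
RETENTION = timedelta(days=30)  # hypothetical retention window

audit_log: list[dict] = []


def access(user: str, role: str, permission: str, resource: str, now: datetime) -> bool:
    """Check a role-based permission and log the attempt, allowed or denied."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": now.isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed


def purge_expired(records: list[dict], now: datetime) -> list[dict]:
    """Keep only records still inside the retention window."""
    return [r for r in records if now - r["created_at"] < RETENTION]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(access("u1", "counselor", "read_flags", "flag:p-017", now))      # True
print(access("u2", "program_admin", "read_flags", "flag:p-017", now))  # False
records = [
    {"id": 1, "created_at": now - timedelta(days=45)},
    {"id": 2, "created_at": now - timedelta(days=3)},
]
print([r["id"] for r in purge_expired(records, now)])  # [2]
```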

3. No Replacement of Human Empathy

FaceOff is not a therapist, counselor, or diagnostic engine. It does not attempt to simulate empathy or clinical judgment. Instead, it functions as a signal amplifier, helping humans notice when care or conversation may be needed.

Governance and the Path Forward

As regulation struggles to keep pace with AI innovation, platforms like FaceOff show how self-imposed governance, clinical alignment, and ethical restraint can pave the way for responsible adoption. Clear boundaries are maintained between:

● AI-assisted observation

● Human clinical assessment

● Professional decision-making

This alignment supports compliance with emerging data protection laws, workplace ethics standards, and healthcare accountability frameworks.

Conclusion: Augmentation, Not Commodification

The future of Mental Health AI depends not on how much it can automate, but on how well it respects human vulnerability. FaceOff exemplifies a model where AI augments care rather than commodifies it—supporting early awareness, expanding access to support systems, and strengthening human decision-making.

Used responsibly, FaceOff can help democratize mental health support while preserving dignity, privacy, and trust. Used recklessly, such technologies risk eroding the very care they aim to enhance.

The difference lies in design, governance, and intent—and FaceOff places humans firmly at the center of care.
