Privacy once meant walls, locks, and permissions. But with agentic AI (systems that perceive, decide, and act autonomously), privacy is shifting from control to trust.

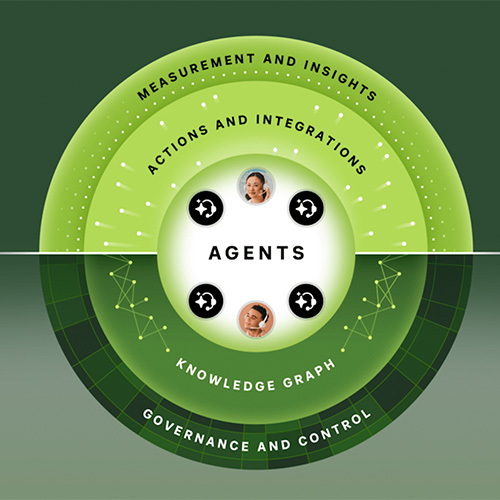

AI agents don’t just process data; they interpret it, infer patterns, and adapt, building evolving models of the world and of us.

This raises concerns that go beyond the traditional confidentiality, integrity, and availability (CIA) triad.

We now require authenticity (can the agent be verified?) and veracity (can its judgments be trusted?).

Trust becomes fragile when mediated by machine intelligence.

Unlike human advisors such as doctors or lawyers, who are bound by professional ethics and legal duties of confidentiality, AI assistants operate without equivalent safeguards.

Their records could be subpoenaed, audited, or exploited, turning intimate exchanges into potential evidence.

Without protections such as an “AI-client privilege,” the trust users place in these systems risks turning into regret.

Already, agentic AI routes traffic, manages financial portfolios, and shapes digital identities.

It remembers details we have forgotten, fills in gaps, and sometimes shares more than intended, whether helpfully or recklessly.

Emerging technologies like Agentic RAG extend this further.

By combining retrieval-augmented generation (RAG) with cognitive reasoning layers, these systems aim to deliver context-aware, proactive, and explainable responses.

Adaptive Agentic RAG goes a step further, dynamically adjusting its retrieval strategy, reasoning, and response style to each query.
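To make the idea concrete, here is a toy Python sketch of an adaptive retrieval loop. It is a hypothetical illustration, not the design of any particular Agentic RAG framework. The corpus, the `EXPANSIONS` rewrite table, the Jaccard scoring, and the `min_score` threshold are all assumptions. They stand in for a vector store, an LLM-driven query rewrite, and a learned relevance check. When the best match looks weak, the agent reformulates the query and widens retrieval. A simple reasoning step then picks a response style. The sketch returns a plan rather than calling a language model.

```python
from dataclasses import dataclass, field

# Hypothetical toy corpus standing in for a document or vector store.
CORPUS = {
    "doc1": "agentic ai systems perceive decide and act autonomously",
    "doc2": "retrieval augmented generation grounds answers in retrieved documents",
    "doc3": "privacy for ai agents depends on trust authenticity and veracity",
}

# Hypothetical rewrite table standing in for an LLM query-reformulation step.
EXPANSIONS = {
    "rag": "retrieval augmented generation",
    "agents": "agentic ai agents",
}


def tokenize(text: str) -> list[str]:
    """Lowercase, drop question marks, and split on whitespace."""
    return text.lower().replace("?", "").split()


def retrieve(query: str, k: int) -> list[tuple[str, float]]:
    """Return the top-k documents ranked by Jaccard overlap with the query."""
    q = set(tokenize(query))
    scored = []
    for doc_id, text in CORPUS.items():
        d = set(tokenize(text))
        union = q | d
        scored.append((doc_id, len(q & d) / len(union) if union else 0.0))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def reformulate(query: str) -> str:
    """Expand known abbreviations so the next retrieval pass can match more text."""
    return " ".join(EXPANSIONS.get(word, word) for word in tokenize(query))


@dataclass
class Plan:
    query: str  # the query actually used for the final retrieval pass
    style: str  # response style chosen by the reasoning step
    sources: list[str] = field(default_factory=list)
    rounds: int = 0


def adaptive_agentic_rag(query: str, min_score: float = 0.2, max_rounds: int = 2) -> Plan:
    current, k = query, 1
    hits = retrieve(current, k)
    rounds = 1
    # Adaptive retrieval: if the best hit is weak, rewrite the query and widen k.
    while rounds < max_rounds and hits[0][1] < min_score:
        current, k = reformulate(current), k + 1
        hits = retrieve(current, k)
        rounds += 1

    sources = [doc_id for doc_id, score in hits if score > 0]
    # Reasoning step: choose how to respond based on evidence strength and intent.
    if hits[0][1] < min_score:
        style = "ask_clarification"  # evidence too weak to answer confidently
    elif query.lower().startswith(("why", "how", "explain")):
        style = "explanatory"  # cite sources so the answer stays explainable
    else:
        style = "concise"
    return Plan(query=current, style=style, sources=sources, rounds=rounds)


if __name__ == "__main__":
    print(adaptive_agentic_rag("how does rag work?"))
```

Here is how the example query plays out. `adaptive_agentic_rag("how does rag work?")` finds no overlapping documents on its first pass. It then rewrites the query to spell out "retrieval augmented generation" and retrieves `doc2` on the second pass. Because the question starts with "how", it chooses an explanatory style. A real system would replace each stand-in with model calls. This sketch is only meant to show the control flow: retrieve, assess, adapt, respond.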

This evolution promises more trustworthy digital assistants, smarter governance, and more resilient AI ecosystems, with the potential to redefine how we innovate and collaborate in the real world.
