Cyber attackers are using artificial intelligence to scale phishing and deception faster than organisations can train employees to respond, exposing a widening gap in people security, according to the Threatcop People Security Report 2025.

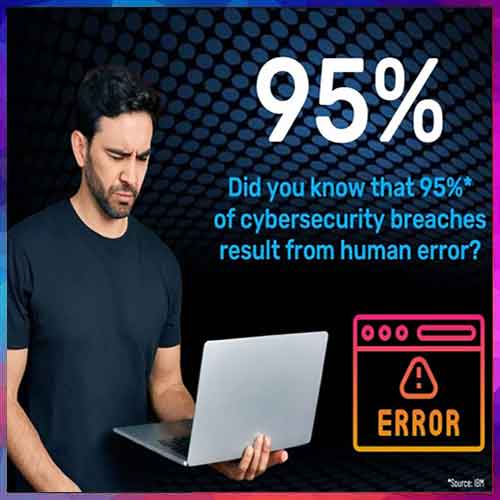

The report finds that static security awareness programmes now deliver negligible lasting protection against AI-generated phishing campaigns that continuously adapt their tone, timing, and targeting. As a result, human error remains the root cause of 95% of cyber breaches, despite growing investment in technical security tools.

The study highlights that attackers increasingly rely on social engineering and credential misuse rather than on traditional software vulnerabilities. The strategy is lucrative: Business Email Compromise (BEC) alone caused global losses of 3 billion dollars in 2023, underscoring how behaviour-driven attacks translate directly into significant financial damage.

The report also examines what CISOs call the "golden hour": the short period between initial compromise and detection, during which most losses occur. AI-driven attacks let threat actors move faster within this window, while organisations struggle to recognise the early behavioural indicators of breach activity.

Threatcop notes that defending against these attack techniques now requires the use of AI within enterprises to simulate real-world phishing and deception at scale. CISOs contributing to the report said that without adaptive testing and continuous feedback loops, traditional training models fail to prepare employees for modern threat patterns.

The risk is most acute in regulated sectors. The report finds that 95% of attacks on financial institutions involve a human element, increasing pressure on banks and insurers to strengthen people-centric controls as AI accelerates attack cycles and shrinks the time available for detection.

Commenting on the findings, Pavan Kushwaha, CEO, Threatcop & Kratikal, said, "AI has changed the economics of social engineering. Attackers can now test, refine and deploy deception at a scale that manual training methods were never designed for. Our findings show that organisations need to move from periodic awareness sessions to continuous, AI-driven testing that reflects how real attacks unfold. Without that shift, the gap between compromise and detection will continue to widen."

The Threatcop People Security Report 2025 is being shared with security leaders across India and overseas markets, with a broader public release planned in the coming months. The findings are expected to guide how enterprises recalibrate people security strategies, budget priorities and detection models as AI increasingly shapes both attack methods and cyber defence planning for 2026.
