Fake AI Browser Add-Ons Compromise 260,000 Users

A sprawling campaign of bogus AI browser tools has compromised more than 260,000 users, according to researchers at LayerX. The extensions masqueraded as productivity enhancers while secretly siphoning sensitive information.

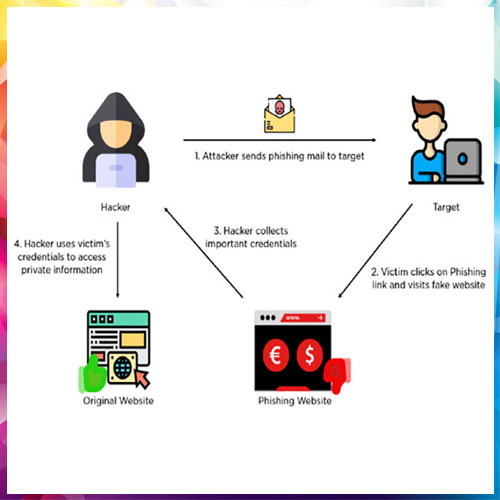

Distributed through the Chrome Web Store, the plug-ins promised translation, summarisation and chatbot features. Behind that front, they opened a covert channel between victims' browsers and attacker-controlled servers.

The technical twist lies in remote iframes. Rather than bundling malicious code in the extension package, the add-ons loaded their interfaces in iframes served from external domains, so the code submitted for store review looked harmless.
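For defenders, this design suggests one rough triage signal: extension pages that frame content from remote web origins instead of packaged files. The Python sketch below, using only the standard library, lists `<iframe>` sources in an HTML file that point at `http(s)` or protocol-relative URLs. The function name `find_remote_iframes` and the example URLs are hypothetical, and this is an illustrative heuristic, not LayerX's detection method. A remote iframe is a reason to look closer, not proof of malice.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class IframeCollector(HTMLParser):
    """Collects the src attribute of every <iframe> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.sources: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag == "iframe":
            for name, value in attrs:
                if name == "src" and value:
                    self.sources.append(value)


def find_remote_iframes(html: str) -> list[str]:
    """Return iframe sources loaded from the web rather than from the extension package."""
    parser = IframeCollector()
    parser.feed(html)
    parser.close()
    remote = []
    for src in parser.sources:
        parts = urlparse(src)
        scheme = parts.scheme.lower()
        # http(s) URLs, or protocol-relative URLs such as //host/path, are remote;
        # relative paths and chrome-extension:// URLs point at packaged files.
        if scheme in ("http", "https") or (scheme == "" and parts.netloc):
            remote.append(src)
    return remote


# Hypothetical popup page mixing a remote frame and a packaged one.
page = '<div><iframe src="https://ui.example.net/panel"></iframe><iframe src="panel.html"></iframe></div>'
print(find_remote_iframes(page))  # ['https://ui.example.net/panel']
```

Parsing with `html.parser` rather than a regular expression keeps attribute order, quoting and letter case from hiding a frame.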

Because the operational logic lived on remote servers, criminals could change an extension's behaviour at any time without submitting an update for review. A tool that seemed benign one day could pivot to harvesting credentials the next.

Email was a priority target. Multiple extensions monitored activity in Gmail, extracting messages, drafts and contact data straight from the page's Document Object Model (DOM).

The campaign also hunted for developer secrets. When users entered API keys or tokens for services such as OpenAI or Anthropic, the information could be intercepted instantly.

Investigators observed rapid re-uploads whenever one listing was removed. Nearly identical copies resurfaced under new identities, frustrating takedown efforts.

Even more troubling, some versions carried trust badges, which encouraged installations and made their broad permission requests seem less suspicious.

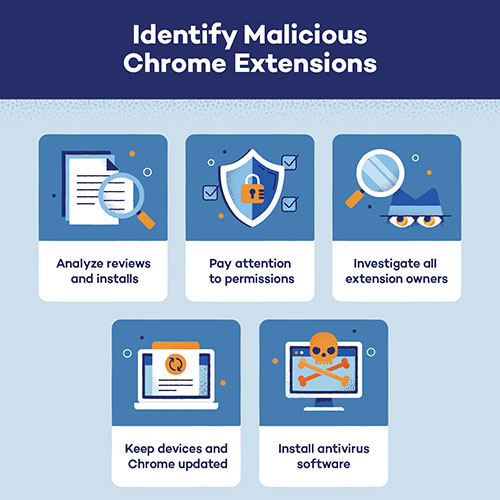

For defenders, reputation checks alone are no longer enough. Security teams must minimise extension privileges, enforce allow-lists and watch for unusual page injections or data flows.
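As a rough illustration of allow-listing combined with privilege review, the Python sketch below checks an extension's ID against an approved set and flags broad permissions in its `manifest.json`, including page access granted through `content_scripts` matches such as Gmail. The approved ID, the permission list and the function names are hypothetical and deliberately incomplete. In managed Chrome deployments, allow-lists are normally enforced through enterprise policies such as `ExtensionInstallAllowlist` and `ExtensionInstallBlocklist`; a script like this would serve only as an audit aid.

```python
# Hypothetical allow-list: extension IDs the security team has approved.
APPROVED_IDS = {"abcdefghijklmnopabcdefghijklmnop"}

# Illustrative, not exhaustive: API permissions that expose browsing data.
SENSITIVE_PERMISSIONS = {"cookies", "history", "scripting", "tabs", "webRequest"}

# Match patterns that cover every site.
ALL_SITES = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def host_patterns(manifest: dict) -> set[str]:
    """Collect every URL pattern the extension can reach or inject scripts into."""
    patterns = set(manifest.get("host_permissions", []))  # Manifest V3 key
    # Manifest V2 lists host patterns alongside API permissions.
    patterns |= {p for p in manifest.get("permissions", []) if "/" in p or p == "<all_urls>"}
    for script in manifest.get("content_scripts", []):
        patterns |= set(script.get("matches", []))
    return patterns


def review_extension(ext_id: str, manifest: dict) -> list[str]:
    """Return human-readable findings; an empty list means nothing was flagged."""
    findings = []
    if ext_id not in APPROVED_IDS:
        findings.append("not on allow-list")
    perms = set(manifest.get("permissions", []))
    for p in sorted(perms & SENSITIVE_PERMISSIONS):
        findings.append(f"sensitive permission: {p}")
    hosts = host_patterns(manifest)
    for h in sorted(hosts & ALL_SITES):
        findings.append(f"all-sites access: {h}")
    for h in sorted(hosts):
        if "mail.google.com" in h:
            findings.append(f"email access: {h}")
    return findings


# Hypothetical manifest resembling an "AI summariser" that reads Gmail.
manifest = {
    "manifest_version": 3,
    "name": "AI Summariser",
    "permissions": ["storage", "scripting"],
    "host_permissions": ["<all_urls>"],
    "content_scripts": [{"matches": ["https://mail.google.com/*"], "js": ["content.js"]}],
}
print(review_extension("pppppppppppppppppppppppppppppppp", manifest))
# ['not on allow-list', 'sensitive permission: scripting', 'all-sites access: <all_urls>', 'email access: https://mail.google.com/*']
```

A manifest review like this cannot see what a remotely loaded interface does after installation, which is why the article pairs it with monitoring for unusual page injections and data flows.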

The lesson is stark. Attackers are exploiting enthusiasm around AI to reach the email, credentials and API keys at the heart of everyday digital work. Removing unknown extensions and rotating exposed credentials should be immediate priorities.
